Morgan Shahan received her PhD in History from Johns Hopkins University in 2020. While at Hopkins, she received Dean’s Teaching and Prize Fellowships. In 2019, her department recognized her work with the inaugural Toby Ditz Prize for Excellence in Graduate Student Teaching. Allon Brann from the Center for Educational Resources spoke to Morgan about an interesting project she designed for her fall 2019 course, “Caged America: Policing, Confinement, and Criminality in the ‘Land of the Free.’”

I’d like to start by asking you to give us a brief description of the final project. What did your students do?

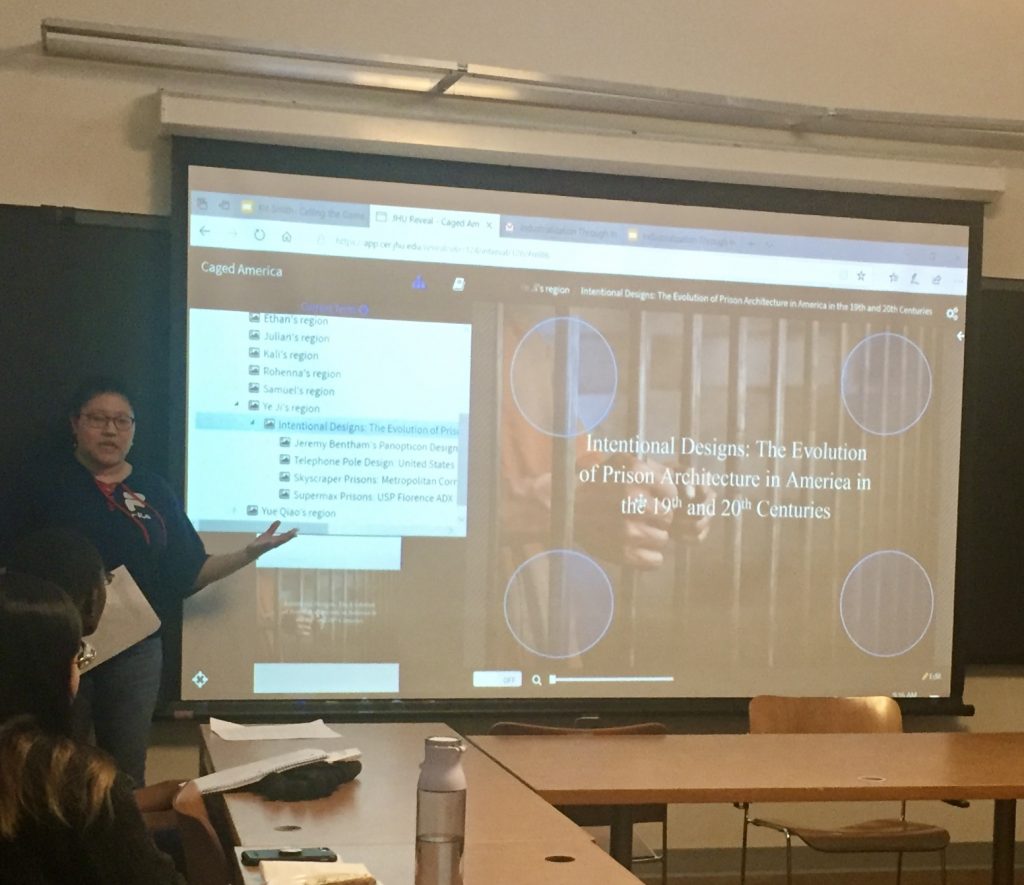

Students created virtual museum exhibits on topics of their choice related to the themes of our course, including the rise of mass incarceration, the repeated failure of corrections reform, changing conceptions of criminality, and the militarization of policing. Each exhibit included a written introduction and interpretive labels for 7-10 artifacts, which students assembled using the image annotation program Reveal. On the last day of class, students presented these projects to their classmates. Examples of projects included: “Birthed Behind Bars: Policing Pregnancy and Motherhood in the 19th and 20th Centuries,” “Baseball in American Prisons,” and “Intentional Designs: The Evolution of Prison Architecture in America in the 19th and 20th Centuries.”

Can you describe how you used scaffolding to help students prepare for the final project?

I think you need to scaffold any semester-long project. My students completed several component tasks before turning in their final digital exhibits. Several weeks into the semester, they submitted a short statement outlining the “big idea” behind their exhibitions. The “big idea statement,” a concept I borrowed from museum consultant Beverly Serrell, explained the theme, story, or argument that defined the exhibition’s tone and dictated its content. I asked students to think of the “big idea statement” as the thesis for their exhibition.

Students then used the big idea to guide them as they chose one artifact and drafted a 200-word label for it. I looked for artifact labels that were clearly connected to the student’s big idea statement, included the context visitors would need to know to understand the artifact, and presented the student’s original interpretation of the artifact. The brevity of the assignment gave me time to provide each student with extensive written comments. In these comments and in conversations during office hours, I helped students narrow their topics, posed questions to help guide analysis and interpretation of artifacts, and suggested additional revisions focused on writing mechanics and tone.

Later in the semester, students expanded their big idea statements into rough drafts of the introductions for their digital exhibit. I asked that each introduction orient viewers to the exhibition, outline necessary historical context, and set the tone for the online visit. I also set aside part of a class period for a peer review exercise involving these drafts. I hoped that their classmates’ comments, along with my own, would help students revise their introductions before they submitted their final exhibit.

If I assigned this project again, I would probably ask students to turn in another label for a second artifact. This additional assignment would allow me to give each student more individualized feedback and would help to further clarify my grading criteria before the final project due date.

When you first taught this course a few years ago, you assigned students a more traditional task—a research paper. Can you explain why you decided to change the final assignment this time around?

I wanted to try a more flexible and creative assignment that would push students to develop research and analytical skills in a different format. The exhibit project allows students to showcase their own interpretation of a theme, put together a compelling historical narrative, and advance an argument. The project remains analytically rigorous, pushing students to think about how history is constructed. Each exhibit makes a claim—there is reasoning behind each choice the student makes when building the exhibit and each question he or she asks of the artifacts included. The format encourages students to focus on their visual analysis skills, which tend to get sidelined in favor of textual interpretation in most of the student research papers I have read. Additionally, the exhibit assignment asks students to write for a broader audience, emphasizing clarity and brevity in their written work.

What challenges did you encounter while designing this assignment from scratch?

In my experience, designing any new assignment involves certain risks. First, I have found it difficult to strike a balance between clearly stating expectations for student work and leaving room for students to be creative. Finding that balance was even harder with a non-traditional assignment. I knew that many of my students would not have encountered an exhibit project before my course, so I needed to clarify the utility of the project and my expectations for their submissions.

Second, I never expected to go down such a long research rabbit hole when creating the assignment directions. I naively assumed that it would be fairly simple to put together an assignment sheet outlining the requirements for the virtual museum project. I quickly learned, however, that it was difficult to describe exactly what I expected from students without diving into museum studies literature and scholarship on teaching and learning.

I also needed to find a digital platform for student projects. Did I want student projects to be accessible to the public? How much time was I willing to invest in teaching students how to navigate a program or platform? After discussing my options with Reid Sczerba in the Center for Educational Resources (CER), I eventually settled on Reveal, a Hopkins-exclusive image-annotation program. The program would keep student projects private, foreground written work, and allow for creative organization of artifacts within the digital exhibits. Additionally, I needed to determine the criteria for the written component of the assignment. I gave myself a crash course in museum writing, scouring teaching blogs, museum websites, journals on exhibition theory and practice, and books on curation for the right language for the assignment sheet. I spoke with Chesney Medical Archives Curator Natalie Elder about exhibit design and conceptualization. My research helped me understand the kind of writing I was looking for, identify models for students, and ultimately create my own exhibit to share with them.

Given all the work that this design process entailed, do you have any advice for other teachers who are thinking about trying something similar?

This experience pushed me to think about structuring assignments beyond the research paper for future courses. Instructors need to make sure that students understand the requirements for the project, develop clear standards for grading, and prepare themselves mentally for the possibility that the assignment could crash and burn. Personally, I like taking risks when I teach—coming up with new activities for each class session and adjusting in the moment should these activities fall flat—but developing a semester-long project from scratch was a big gamble.

How would you describe the students’ responses to the project? How did they react to the requirements and how do you think the final projects turned out?

I think that many students ended up enjoying the project, but responses varied at first. Some students expressed frustration with the technology, saying they were not computer-savvy and were worried about having to learn a new program. I tried to reassure these students by outing myself as a millennial, promising half-jokingly that if I could learn to use it, they would find it a cinch. Unfortunately, I noticed that many students found the technology somewhat confusing despite the tutorial I delivered in class. After reading through student evaluations, I also realized that I should have weighted the final digital exhibit and presentation less heavily and included additional scaffolded assignments to minimize the end-of-semester crunch.

Despite these challenges, I was really impressed with the outcome. While clicking through the online exhibits, I could often imagine the artifacts and text set up in a physical museum space. Many students composed engaging label text, keeping their writing accessible to their imaginary museum visitors while still delivering a sophisticated interpretation of each artifact. In some cases, I found myself wishing students had prioritized deeper analysis over background information in their labels; if I assigned this project again, I would emphasize that aspect.

I learned a lot about what it means to support students through an unfamiliar semester-long project, and I’m glad they were willing to take on the challenge. I found that students appreciated the flexibility of the guidelines and the room this left for creativity. One student wrote that the project was “unique and fun, but still challenging, and let me pursue something I couldn’t have if we were just assigned a normal paper.”

If you’re interested in pursuing a project like this one and have more questions for Morgan, you can contact her at morganjshahan@gmail.com.

For other questions or help developing new assessments to use in your courses, contact the Center for Educational Resources (cerweb@jhu.edu).

Allon Brann, Teacher Support Specialist

Center for Educational Resources

Image Source: Morgan Shahan